A new version of this tutorial is here.

This one will be removed eventually.

One of the simplest and most useful effects that isn’t already present in Unity is object outlines.

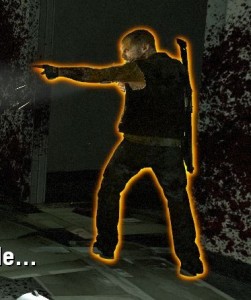

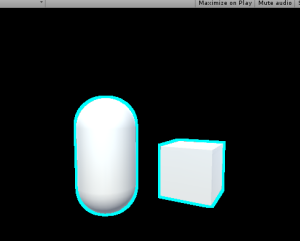

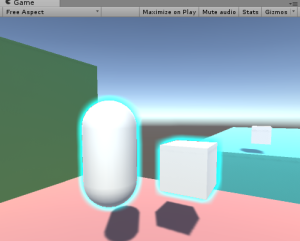

There are a couple of ways to do this, and the Unity Wiki covers one of them. However, the example in the Wiki cannot make a “blurred” outline, and it requires smoothed normals for all vertices. If one of your outlined objects has a hard edge, you will get the following result:

While the capsule on the left looks fine, the cube on the right has artifacts. And to the beginners: the solution is NOT to apply smoothing groups or smooth normals out, or else it will mess with the lighting of the object. Instead, we need to do this as a simple post-processing effect. Here are the basic steps:

- Render the scene to a texture (render target)

- Render only the selected objects to another texture, in this case the capsule and box

- Draw a rectangle across the entire screen and put the texture on it with a custom shader

- The pixel/fragment shader for that rectangle will take samples from the previous texture, and add color to pixels which are near the object on that texture

- Blur the samples

Step 1: Render the scene to a texture

First things first, let’s make our C# script and attach it to the camera gameobject:

using UnityEngine;

using System.Collections;

public class PostEffect : MonoBehaviour

{

Camera AttachedCamera;

public Shader Post_Outline;

void Start ()

{

AttachedCamera = GetComponent<Camera>();

}

void OnRenderImage(RenderTexture source, RenderTexture destination)

{

}

}

OnRenderImage() works as follows: after the scene is rendered, every component attached to the rendering camera receives this message. Unity passes in a RenderTexture containing the rendered scene (source), along with a RenderTexture to write the output to (destination). The scene is not drawn to the screen unless we write it to the destination ourselves. So, that’s step 1 complete.

Step 2: Render only the selected objects to another texture

There are again many ways to select certain objects to render, but I believe this is the cleanest way. We are going to create a shader that ignores lighting or depth testing, and just draws the object as pure white. Then we re-draw the outlined objects, but with this shader.

//This shader is used to re-draw the outlined objects. It ignores lighting and depth testing, and just draws the object as white.

Shader "Custom/DrawSimple"

{

SubShader

{

ZWrite Off

ZTest Always

Lighting Off

Pass

{

CGPROGRAM

#pragma vertex VShader

#pragma fragment FShader

struct VertexToFragment

{

float4 pos:SV_POSITION;

};

//just get the position correct

//vertex shader inputs use the POSITION semantic; SV_POSITION is only for the output

VertexToFragment VShader(float4 vertex:POSITION)

{

VertexToFragment o;

o.pos=mul(UNITY_MATRIX_MVP,vertex);

return o;

}

//return white

half4 FShader():COLOR0

{

return half4(1,1,1,1);

}

ENDCG

}

}

}

Now, whenever the object is drawn with this shader, it will be white. We can make the object get drawn by using Unity’s Camera.RenderWithShader() function. So, our new camera code needs to render the objects that reside on a special layer, rendering them with this shader, to a texture. Because we can’t use the same camera to render twice in one frame, we need to make a new camera. We also need to handle our new RenderTexture, and work with binary briefly.

Our new C# code is as follows:

using UnityEngine;

using System.Collections;

public class PostEffect : MonoBehaviour

{

Camera AttachedCamera;

public Shader Post_Outline;

public Shader DrawSimple;

Camera TempCam;

// public RenderTexture TempRT;

void Start ()

{

AttachedCamera = GetComponent<Camera>();

TempCam = new GameObject().AddComponent<Camera>();

TempCam.enabled = false;

}

void OnRenderImage(RenderTexture source, RenderTexture destination)

{

//set up a temporary camera

TempCam.CopyFrom(AttachedCamera);

TempCam.clearFlags = CameraClearFlags.Color;

TempCam.backgroundColor = Color.black;

//cull any layer that isn't the outline

TempCam.cullingMask = 1 << LayerMask.NameToLayer("Outline");

//make the temporary rendertexture

RenderTexture TempRT = new RenderTexture(source.width, source.height, 0, RenderTextureFormat.R8);

//put it to video memory

TempRT.Create();

//set the camera's target texture when rendering

TempCam.targetTexture = TempRT;

//render all objects this camera can render, but with our custom shader.

TempCam.RenderWithShader(DrawSimple,"");

//copy the temporary RT to the final image

Graphics.Blit(TempRT, destination);

//release the temporary RT

TempRT.Release();

}

}

Bitmasks

The line:

TempCam.cullingMask = 1 << LayerMask.NameToLayer("Outline");

means that we are shifting the value 1 (binary: 00000000000000000000000000000001) a number of bits to the left, in this case by our layer's index. Layers are numbered from 0, so binary "01" selects layer 0, "010" selects layer 1, "0100" selects layer 2, and so on, up to a total of 32 layers (because the mask has 32 bits). Unity uses these bits to mask what it draws; in other words, this is a bitmask.

So, if our “Outline” layer is 8, to draw it, we need bit 8 to be set. We take a bit that we know to be in position 0 and shift it over to position 8. LayerMask.NameToLayer() will return the index of the layer (8), and the bit shift operator will shift the bit that many places (8).

To the beginners: No, you cannot just set the layer mask to “8”. 8 in decimal is 1000 in binary, which sets bit 3, and would result in layer 3 being drawn.
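The shift arithmetic can be checked outside Unity. The following is a minimal sketch in plain C#; the class and method names (`LayerMaskSketch`, `MaskForLayer`, `Contains`, `MaskForLayers`) are made up for illustration and are not part of Unity's API.

```csharp
using System;

public static class LayerMaskSketch
{
    // Builds a mask with only the bit for `layer` set (layers are numbered 0..31).
    public static int MaskForLayer(int layer)
    {
        if (layer < 0 || layer > 31)
            throw new ArgumentOutOfRangeException(nameof(layer));
        return 1 << layer;
    }

    // True when the bit for `layer` is set in `mask`.
    public static bool Contains(int mask, int layer)
    {
        return (mask & (1 << layer)) != 0;
    }

    // Combines several layers into one mask with bitwise OR.
    public static int MaskForLayers(params int[] layers)
    {
        int mask = 0;
        foreach (int layer in layers)
            mask |= MaskForLayer(layer);
        return mask;
    }
}
```

With these helpers, `MaskForLayer(8)` is 256, while the plain number 8 used as a mask contains layer 3 and not layer 8. Unity's own `LayerMask.GetMask()` builds a combined mask from layer names, which may be more convenient when several layers are involved.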

Q: Why do we even do bitmasks?

A: For performance reasons.

Moving along…

Make sure that at render time, the objects you need outlined are on the outline layer. You could do this by changing the object’s layer in LateUpdate(), and setting it back in OnRenderObject(). But out of laziness, I’m just setting them to the outline layer in the editor.

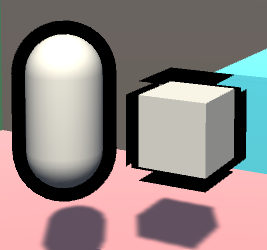

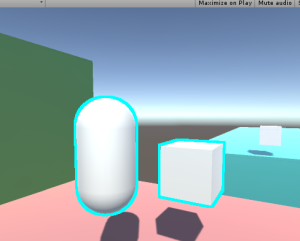

The above screenshot shows what the scene should look like with our code. So that’s step 2; we rendered those objects to a texture.

Step 3: Draw a rectangle to the screen and put the texture on it with a custom shader.

Except that’s already what’s going on in the code; Graphics.Blit() copies a texture over to a rendertexture. It draws a full-screen quad (vertex coordinates 0,0, 0,1, 1,1, 1,0) and puts the texture on it.

And, you can pass in a custom shader for when it draws this.

So, let’s make a new shader:

Shader "Custom/Post Outline"

{

Properties

{

//Graphics.Blit() sets the "_MainTex" property to the texture passed in

_MainTex("Main Texture",2D)="black"{}

}

SubShader

{

Pass

{

CGPROGRAM

sampler2D _MainTex;

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct v2f

{

float4 pos : SV_POSITION;

float2 uvs : TEXCOORD0;

};

v2f vert (appdata_base v)

{

v2f o;

//Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.

o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

//Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.

o.uvs = o.pos.xy / 2 + 0.5;

return o;

}

half4 frag(v2f i) : COLOR

{

//return the texture we just looked up

return tex2D(_MainTex,i.uvs.xy);

}

ENDCG

}

//end pass

}

//end subshader

}

//end shader

If we put this shader onto a new material, and pass that material into Graphics.Blit(), we can now re-draw our rendered texture with our custom shader.

using UnityEngine;

using System.Collections;

public class PostEffect : MonoBehaviour

{

Camera AttachedCamera;

public Shader Post_Outline;

public Shader DrawSimple;

Camera TempCam;

Material Post_Mat;

// public RenderTexture TempRT;

void Start ()

{

AttachedCamera = GetComponent<Camera>();

TempCam = new GameObject().AddComponent<Camera>();

TempCam.enabled = false;

Post_Mat = new Material(Post_Outline);

}

void OnRenderImage(RenderTexture source, RenderTexture destination)

{

//set up a temporary camera

TempCam.CopyFrom(AttachedCamera);

TempCam.clearFlags = CameraClearFlags.Color;

TempCam.backgroundColor = Color.black;

//cull any layer that isn't the outline

TempCam.cullingMask = 1 << LayerMask.NameToLayer("Outline");

//make the temporary rendertexture

RenderTexture TempRT = new RenderTexture(source.width, source.height, 0, RenderTextureFormat.R8);

//put it to video memory

TempRT.Create();

//set the camera's target texture when rendering

TempCam.targetTexture = TempRT;

//render all objects this camera can render, but with our custom shader.

TempCam.RenderWithShader(DrawSimple,"");

//copy the temporary RT to the final image

Graphics.Blit(TempRT, destination,Post_Mat);

//release the temporary RT

TempRT.Release();

}

}

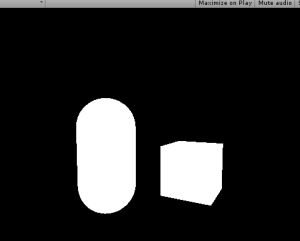

Which should lead to the results above, but the process is now using our own shader.

Step 4: add color to pixels which are near white pixels on the texture.

For this, we need to get the relevant texture coordinate of the pixel we are rendering, and look up all adjacent pixels for existing objects. If an object exists near our pixel, then we should draw a color at our pixel, as our pixel is within the outlined radius.

Shader "Custom/Post Outline"

{

Properties

{

_MainTex("Main Texture",2D)="black"{}

}

SubShader

{

Pass

{

CGPROGRAM

sampler2D _MainTex;

//_TexelSize is a float2 that says how much screen space a texel occupies.

float2 _MainTex_TexelSize;

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct v2f

{

float4 pos : SV_POSITION;

float2 uvs : TEXCOORD0;

};

v2f vert (appdata_base v)

{

v2f o;

//Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.

o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

//Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.

o.uvs = o.pos.xy / 2 + 0.5;

return o;

}

half4 frag(v2f i) : COLOR

{

//arbitrary number of iterations for now

int NumberOfIterations=9;

//split texel size into smaller words

float TX_x=_MainTex_TexelSize.x;

float TX_y=_MainTex_TexelSize.y;

//and a final intensity that increments based on surrounding intensities.

float ColorIntensityInRadius=0;

//for every iteration we need to do horizontally

for(int k=0;k<NumberOfIterations;k+=1)

{

//for every iteration we need to do vertically

for(int j=0;j<NumberOfIterations;j+=1)

{

//increase our output color by the pixels in the area

ColorIntensityInRadius+=tex2D(

_MainTex,

i.uvs.xy+float2

(

(k-NumberOfIterations/2)*TX_x,

(j-NumberOfIterations/2)*TX_y

)

).r;

}

}

//output some intensity of teal

return ColorIntensityInRadius*half4(0,1,1,1);

}

ENDCG

}

//end pass

}

//end subshader

}

//end shader

And then, if an object exists under the pixel, discard the pixel:

//if something already exists underneath the fragment, discard the fragment.

if(tex2D(_MainTex,i.uvs.xy).r>0)

{

discard;

}

And finally, add a blend mode to the shader:

Blend SrcAlpha OneMinusSrcAlpha

And the resulting shader:

Shader "Custom/Post Outline"

{

Properties

{

_MainTex("Main Texture",2D)="white"{}

}

SubShader

{

Blend SrcAlpha OneMinusSrcAlpha

Pass

{

CGPROGRAM

sampler2D _MainTex;

//_TexelSize is a float2 that says how much screen space a texel occupies.

float2 _MainTex_TexelSize;

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct v2f

{

float4 pos : SV_POSITION;

float2 uvs : TEXCOORD0;

};

v2f vert (appdata_base v)

{

v2f o;

//Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.

o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

//Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.

o.uvs = o.pos.xy / 2 + 0.5;

return o;

}

half4 frag(v2f i) : COLOR

{

//arbitrary number of iterations for now

int NumberOfIterations=9;

//split texel size into smaller words

float TX_x=_MainTex_TexelSize.x;

float TX_y=_MainTex_TexelSize.y;

//and a final intensity that increments based on surrounding intensities.

float ColorIntensityInRadius=0;

//if something already exists underneath the fragment, discard the fragment.

if(tex2D(_MainTex,i.uvs.xy).r>0)

{

discard;

}

//for every iteration we need to do horizontally

for(int k=0;k<NumberOfIterations;k+=1)

{

//for every iteration we need to do vertically

for(int j=0;j<NumberOfIterations;j+=1)

{

//increase our output color by the pixels in the area

ColorIntensityInRadius+=tex2D(

_MainTex,

i.uvs.xy+float2

(

(k-NumberOfIterations/2)*TX_x,

(j-NumberOfIterations/2)*TX_y

)

).r;

}

}

//output some intensity of teal

return ColorIntensityInRadius*half4(0,1,1,1);

}

ENDCG

}

//end pass

}

//end subshader

}

//end shader

Step 5: Blur the samples

Now, at this point, we don’t have any form of blur or gradient. There also exists the problem of performance: If we want an outline that is 3 pixels thick, there are 3×3, or 9, texture lookups per pixel. If we want to increase the outline radius to 20 pixels, that is 20×20, or 400 texture lookups per pixel!

We can solve both of these problems with our upcoming method of blurring, which is very similar to how most gaussian blurs are performed. It is important to note that we are not doing a gaussian blur in this tutorial, as the method of weight calculation is different. I recommend that if you are experienced with shaders, you should do a gaussian blur here, but color it one single color.

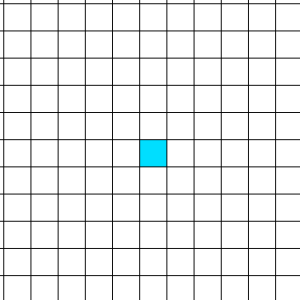

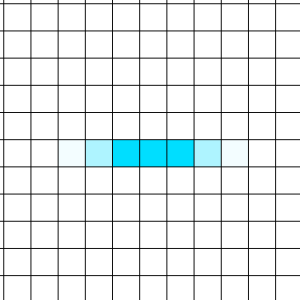

We start with a pixel:

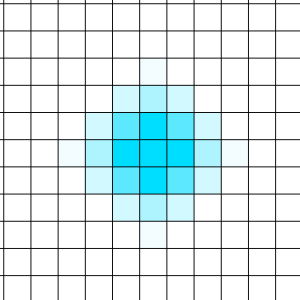

We can sample all neighboring pixels in a circle around it and weight them based on their distance to the sampled pixel:

Which looks really good! But again, that’s a lot of samples once you reach a higher radius.

Fortunately, there’s a cool trick we can do.

We can blur the texel horizontally to a texture….

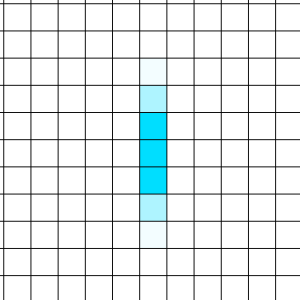

And then read from that new texture, and blur vertically…

Which leads to a blur as well!

And now, instead of a quadratic increase in samples with radius, the increase is linear. When the radius is 5 pixels, we blur 5 pixels horizontally, then 5 pixels vertically. 5+5 = 10, compared to our other method, where 5×5 = 25.
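To see that the two-pass trick gives the same picture as the single-pass version, here is a small CPU-side sketch in plain C#. It is not the shader from this tutorial: the names (`SeparableBlurSketch`, `Blur1D`, `Blur2D`, `MaxDifference`) are invented for the example, every tap is weighted equally (a box blur), and pixels outside the image are assumed to read as black.

```csharp
using System;

public static class SeparableBlurSketch
{
    // Reads src[y, x]; anything outside the image counts as 0 (black).
    static double Sample(double[,] src, int x, int y)
    {
        int h = src.GetLength(0), w = src.GetLength(1);
        return (x < 0 || y < 0 || x >= w || y >= h) ? 0.0 : src[y, x];
    }

    // One n-tap box blur pass along (dx, dy): (1, 0) is horizontal, (0, 1) is vertical.
    public static double[,] Blur1D(double[,] src, int n, int dx, int dy)
    {
        int h = src.GetLength(0), w = src.GetLength(1);
        var dst = new double[h, w];
        for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++)
        {
            double sum = 0.0;
            for (int k = 0; k < n; k++)
            {
                int offset = k - n / 2; // same centering as the shader loops
                sum += Sample(src, x + offset * dx, y + offset * dy);
            }
            dst[y, x] = sum / n;
        }
        return dst;
    }

    // Single-pass n x n box blur: n * n lookups per pixel.
    public static double[,] Blur2D(double[,] src, int n)
    {
        int h = src.GetLength(0), w = src.GetLength(1);
        var dst = new double[h, w];
        for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++)
        {
            double sum = 0.0;
            for (int k = 0; k < n; k++)
            for (int j = 0; j < n; j++)
                sum += Sample(src, x + k - n / 2, y + j - n / 2);
            dst[y, x] = sum / (n * n);
        }
        return dst;
    }

    // Largest per-pixel difference between two images of the same size.
    public static double MaxDifference(double[,] a, double[,] b)
    {
        double max = 0.0;
        for (int y = 0; y < a.GetLength(0); y++)
        for (int x = 0; x < a.GetLength(1); x++)
            max = Math.Max(max, Math.Abs(a[y, x] - b[y, x]));
        return max;
    }
}
```

Calling `Blur1D(Blur1D(image, n, 1, 0), n, 0, 1)` costs n + n lookups per pixel, and for a box blur like this one it should match `Blur2D(image, n)`, which costs n × n, up to floating-point rounding.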

To do this, we need to make 2 passes. Each pass will function like the shader code above, but remove one “for” loop. We also don’t bother with the discarding in the first pass, and instead leave it for the second.

The first pass also uses a single channel for the fragment shader; no colors are needed at that point.

Because we are using two passes, we can’t simply use a blend mode over the existing scene data. Now, we need to do blending ourselves, instead of leaving it to the hardware blending operations.

Shader "Custom/Post Outline"

{

Properties

{

_MainTex("Main Texture",2D)="black"{}

_SceneTex("Scene Texture",2D)="black"{}

}

SubShader

{

Pass

{

CGPROGRAM

sampler2D _MainTex;

//_TexelSize is a float2 that says how much screen space a texel occupies.

float2 _MainTex_TexelSize;

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct v2f

{

float4 pos : SV_POSITION;

float2 uvs : TEXCOORD0;

};

v2f vert (appdata_base v)

{

v2f o;

//Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.

o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

//Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.

o.uvs = o.pos.xy / 2 + 0.5;

return o;

}

half frag(v2f i) : COLOR

{

//arbitrary number of iterations for now

int NumberOfIterations=20;

//split texel size into smaller words

float TX_x=_MainTex_TexelSize.x;

//and a final intensity that increments based on surrounding intensities.

float ColorIntensityInRadius=0;

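//for every iteration we need to do horizontally

for(int k=0;k<NumberOfIterations;k+=1)

//[the body of this loop, the end of the first pass, and the opening of the second pass are missing from this listing]

//(condition reconstructed from the Step 4 shader) if something already exists underneath the fragment, output the scene instead

if(tex2D(_MainTex,i.uvs.xy).r>0)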

{

return tex2D(_SceneTex,float2(i.uvs.x,1-i.uvs.y));

}

//for every iteration we need to do vertically

for(int j=0;j<NumberOfIterations;j+=1)

{

//increase our output color by the pixels in the area

ColorIntensityInRadius+= tex2D(

_GrabTexture,

float2(i.uvs.x,1-i.uvs.y)+float2

(

0,

(j-NumberOfIterations/2)*TX_y

)

).r/NumberOfIterations;

}

//this is alpha blending, but we can't use HW blending unless we make a third pass, so this is probably cheaper.

half4 outcolor=ColorIntensityInRadius*half4(0,1,1,1)*2+(1-ColorIntensityInRadius)*tex2D(_SceneTex,float2(i.uvs.x,1-i.uvs.y));

return outcolor;

}

ENDCG

}

//end pass

}

//end subshader

}

//end shader
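The manual blend on the last line of the second pass is ordinary arithmetic, so it can be tried on the CPU. This is only a sketch in plain C#; `ManualBlendSketch` and `Blend` are hypothetical names, and colors are plain float arrays rather than any Unity type.

```csharp
using System;

public static class ManualBlendSketch
{
    // Mirrors the last line of the second pass, channel by channel:
    // intensity * outlineColor * 2 + (1 - intensity) * sceneColor
    public static float[] Blend(float intensity, float[] outlineColor, float[] sceneColor)
    {
        if (outlineColor.Length != sceneColor.Length)
            throw new ArgumentException("Colors must have the same number of channels.");
        var result = new float[sceneColor.Length];
        for (int c = 0; c < result.Length; c++)
            result[c] = intensity * outlineColor[c] * 2f + (1f - intensity) * sceneColor[c];
        return result;
    }
}
```

With an intensity of 0 the scene color comes back unchanged, which is why pixels far from any outlined object keep the original image. Because of the factor of 2, channels can exceed 1 before the output is written, so the outline saturates quickly near the object.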

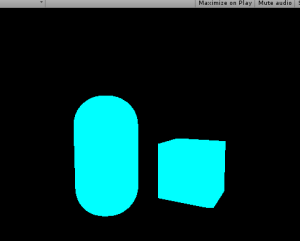

And the final result:

Where you can go from here

First of all, the values (iterations, radius, color, etc.) are all hardcoded in the shader. You can expose them as properties to make the effect friendlier to both programmers and designers.

Second, my code has a falloff based on the number of filled texels in the area, and not the distance to each texel. You could create a gaussian kernel table and multiply the samples by its weights, which would run a little bit faster, and also remove the artifacts and unevenness you can see at the corners of the cube.

Don’t try to generate the gaussian kernel at runtime, though. That is super expensive.
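One reading of this advice is to build the weight table once on the CPU, rather than recomputing it for every pixel in the shader. The sketch below is plain C# under that assumption; `GaussianKernelSketch`, `Build`, `radius` and `sigma` are names chosen for the example, not anything from the shader above.

```csharp
using System;

public static class GaussianKernelSketch
{
    // Returns 2 * radius + 1 weights that sum to 1, centered on index `radius`.
    public static float[] Build(int radius, float sigma)
    {
        if (radius < 0) throw new ArgumentOutOfRangeException(nameof(radius));
        if (sigma <= 0f) throw new ArgumentOutOfRangeException(nameof(sigma));

        var raw = new double[2 * radius + 1];
        double sum = 0.0;
        for (int i = -radius; i <= radius; i++)
        {
            // Unnormalized Gaussian: exp(-x^2 / (2 * sigma^2))
            double w = Math.Exp(-(i * i) / (2.0 * sigma * sigma));
            raw[i + radius] = w;
            sum += w;
        }

        // Normalize so the blur does not brighten or darken the image.
        var weights = new float[raw.Length];
        for (int i = 0; i < raw.Length; i++)
            weights[i] = (float)(raw[i] / sum);
        return weights;
    }
}
```

The resulting array could then be handed to the material once, for example with Unity's `Material.SetFloatArray()`, and each 1D pass would multiply its samples by the matching weight instead of dividing by the iteration count.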

I hope you learned a lot from this! If you have any questions or comments, please leave them below.

Thanks a lot for the useful information! Keep it up!

Hi! This is the first time I’ve found this explained in such detail, thanks!

I still don’t fully understand the shader’s code though, and I can’t get to work the blurred version, I’m getting this http://i.imgur.com/SwPubj3.png.

It seems that the alpha blending part of the code is not working, but I can’t find a way to fix it.

Any ideas? Thanks!

In the final version of the shader (which I think is what you’re using), I removed the alpha blending line, and instead the shader just makes the color equal to whatever the other texture was. So, you need to pass in the existing scene texture to _SceneTex. You can do this from C# by setting the material’s texture via Post_Mat.SetTexture("_SceneTex",TheSceneTexture);.

To make that scene texture you’ll likely have to make a copy of TempRT before running the outline shader, and pass that in as the last parameter.

Thanks for your answer! I’ve just tried doing that, but I can’t get it to work, I’m pretty sure that the only thing missing is how to get the current scene texture, but it seems that I’m doing it wrong. I’ve tried creating a copy of the tempRT, just as you explained, but it’s still rendering a black scene.

I’m having the same problems as Paul. My best guess is that there is a missing function that blends the scene texture with the main texture inside the shader code. Passing in the SceneTexture doesn’t change anything on the final rendered image. Please advise. 🙂

Superb tutorial, thanks for making it!

However, I am having the same issue as the other two. I experimented with the .SetTexture solution but ran into the problem I had to flip the scene RT on the Y axis, and despite my best efforts, it quickly turned into a mess. Any input would be appreciated.

Are you insane to create new render texture each frame? the cpu and memory just wasted. the only case it’s required is the screen resolution changed

Definitely a good point. I’ll have to look into optimizing that part once I fix up the tutorial.

Please do it today I’m begging you! I really need this shader with blur, but don’t know how to fix it.

afaict Unity actually suggests using this exact method for temporary render textures, since its using an object pool behind the scenes, and it allows that pool to be shared between other fullscreen image fx

also will thank you so much for this tutorial, it was a HUGE help for Overland!

I tried to follow your tutorial but I only get a black screen. I’m using Unity 5.3.4f1

Got an outline working but i cant get your tip about using Post_Mat.SetTexture(“_SceneTex”,TheSceneTexture); to render the scene to work.

Hey, also getting just a black Screen on Unity 5.6.1f1, how’d you fix it?

Ok, So i got the scene working now, but somehow your shader only works with conjunction with my old, “wrong” outline shader that offets normals and stuff. http://i.imgur.com/HLCxwhk.png the plain cube is using unity default shader. To get the scene working I added this Post_Mat.SetTexture(“_SceneTex”, source); before TempCamp.RenderWithShader… Then in shader i added

scene = (1 - ColorIntensityInRadius) * scene;

//output some intensity of teal

return ColorIntensityInRadius*half4(1,0,0,1) + scene;

where scene is half4 scene = tex2D(_SceneTex, float2(i.uvs.x, i.uvs.y));

1.5 years later, still relevant. Thank you so much!

This solution is what worked for me, Unity 2017.3.0f3. (After deleting all the return characters and putting in new ones in the shader because copying and pasting from the website ‘corrupts’ it all…. ugh)

Keep in mind the author of this fix above renamed some variables.

how to i change the color?

Hi,

Thanks for the write up.

For most part, this works great! Unfortunately it doesn’t work in a WebGL build for me. The log says that the shader is incompatible because it doesn’t have a vertex shader pass.

I don’t know a thing about shader code, but would it be possible to make this compatible with WebGL?

Am I also right in thinking that this only works in Forward Rendering?

Thanks,

Matt

Hey there,

thanks for the tutorial, helped me a lot and was actually the first useful explanation on the topic i could find.

anyway, its working perfectly fine in the unity editor, but when i export to webgl the screen turns pink when the shader should do its thing.

I guess this is a compability issue with webgl (wouldnt be the first one…) or is there anything else i may have done wrong? i added the shaders to the always include list in the graphics settings.

any ideas?

Hey Will,

Fantastic resource! I’ll echo some of the above sentiments on thoughts for WebGL support — it only renders the Simple pass. I was able to get it working fine in editor by trying a different approach to the Blit. This keeps the screen from going black, but then the outline will no longer work (in WebGL).

Graphics.Blit(TempRT, source, Post_Mat);

Graphics.Blit(source, destination);

Any thoughts are appreciated!

Scratch that! Replacing the Blit with the lines above + changing the RenderTexture format to Default fixed it — R8 is unsupported in WebGL.

RenderTexture TempRT = new RenderTexture(source.width, source.height, 0, RenderTextureFormat.Default);

Thanks again for the great resource 🙂

For anyone having problems having a black screen with the blurred version.

You have to set the texture like this http://imgur.com/8HXwxxp.png in the OnRenderImage method.

Afterwards you will see that everything is inverted there for you have to remove these http://i.imgur.com/cFzTvGH.png in the outline shade in order to remove the invertion.

Final result should be http://i.imgur.com/yNn1KBl.png

Really awesome tutorial Will Weissman this helped me a lot.

❤ Man! You rocks!

Thanks for solve that problem!

Useful indeed. Thanks for sharing.

Great resource and information on how to accomplish outlining in your own project.

We need more people like you, that take the time to provide this valuable information to the community. Very much appreciated.

Great informative post! Thank you for sharing.

If anybody wants to implement this on Steam VR, simply put the post effect script on Camera(eye). In my case the last rendered image was flipped. I simply attached one more SteamVR_CameraFlip script to Camera(eye). I hope this helps someone out there. I made a short post about it, if anybody would need a bit more information: https://denizbicer.github.io/2017/02/16/Object-Outlines-at-Steam-VR-with-Unity-5.html

Thank you for this great explanation! So helpful!

Have you a suggestion on how to achieve the occlusion of the outline if an object is in front of the outlined one?

Thanks!

Hey I’m trying to do the same thing here.

How to pass the depth buffer from the camera rendering the entire scene to the one rendering the highlighted objects white on black? (which would still lead to the issue of flickering, but at least that would bring me one step closer)

The next step would be to use the stencil buffer from the scene rendering to create the black and white image instead of rendering the highlighted object.

Help please =)

Some ideas:

1. Draw highlighted objects as red, other things in the scene in blue using replacement shaders

2. Draw the outline but not only discard pixels whose r channel != 0, but also check for b channel, too.

I would like to know the same thing as Andrea : how to occlude the outline should the outlined object be behind another. Basically, I only want to implement a selection outline, not x-rays.

The Unity Wiki tutorial achieves this by commenting out the “Ztest Always” code in the passes, but your own method is architectured differently and I am not too sure where to tweak that feature.

Hey, I just got a black screen after Step 2. Any thoughts?

I think I found the solution. In the SimpleDraw shader on line 18, replace float4 pos:SV_POSITION with float4 pos:POSITION;

Hi, this looks like a really great tutorial, but unfortunately I’m falling at the first hurdle.

I’m using Unity 5.6.2f1 and after setting up Stage 1 I see a solid red screen.

The temporary camera is being created & is showing only objects on the Outline layer, so the problem seems to be with the RenderTexture.

Any ideas?

I’m getting the error: “Assertion failed: Invalid mask passed to GetVertexDeclaration()” on the line Graphics.Blit(TempRT, destination, Post_Mat);

It doesn’t seem to like the Post_Mat for some reason (when I took out just that parameter in blit the error goes away, but it doesn’t have the intended effect anymore)

I was getting just the outline on a black background, I figured at no point are we blitting the original source to the destination, so I added one line

//copy the temporary RT to the final image

Graphics.Blit(source, destination);

Graphics.Blit(TempRT, destination,Post_Mat);

And then it works perfectly with no other changes. Hope that helps anyone who was having the same trouble as me – fantastic tutorial, thanks! An excellent base to work from for what I want to do.

Good tutorial, just wish it was updated as there are a few minor glitches. As stated by Will above, _SceneTex has to be provided to the shader in order to show more than a black screen. The image will then, however be flipped. This is due to two 1-i.uvs.y too many in the final Post Outline shader:

Line 130 should read:

return tex2D(_SceneTex,float2(i.uvs.x,i.uvs.y))

Line 149 should read:

half4 outcolor = ColorIntensityInRadius*half4(0,1,1,1)*2+(1-ColorIntensityInRadius)*tex2D(_SceneTex,float2(i.uvs.x,i.uvs.y));

Do keep the 1-i.uvs.y on line 139 though, otherwise the outline will be flipped.

How do we achieve the occlusion of the outline if an object is in front of the outlined object?

I don’t know it’s a good idea, but it can be solved by installing three cameras.

1 : Background camera

2 : The Highlight Camera

3 : Object camera

Actually, this is not a good idea. You’ll need to invest time into keeping all cameras consistent and synchronized with each other, on top of any other rendering overhead that comes with a camera.

Still, you’re essentially following what’s going on! The utility in using OnRenderImage() is that you can intercept the right points of the rendering pipeline on a single camera, and then you can easily apply the script to any camera in any scene without doing weird camera setups 🙂

Solid tutorial – thanks!

My main issue in the end was the render texture being inverted on the Y axis, which I found comes down to a cross platform rendering issue, adding this code into the top of the fragment function in the PostOutline.shader fixes it:

#if UNITY_UV_STARTS_AT_TOP

if (_MainTex_TexelSize.y > 0)

i.uvs.y = 1 - i.uvs.y;

#endif

Solution from here: https://docs.unity3d.com/Manual/SL-PlatformDifferences.html

Will look in future at working out how to occlude things that move in front but for now it does exactly what I need.

If I could pin this comment, I would

Hi,

For me, the outline is working, but nothing else. I just have some outlines on a black screen. Similar to what Adam Clements saw. I tried his double Blit idea, but it didn’t solve the problem.

Any idea what I am doing wrong? Thanks!

Update: I was able to get it working by trying some of the other stuff explained above. However, I am now having the issue that Alex ADEdge was talking about where the outline seems to be offset (inverted) along the Y axis.

I tried adding his code snippit to the “top of the fragment function” in PostOutline.shader. However, I’m not sure where to put it. There are 2 fragment functions, if I put it in the top one, it seems to cull the wrong pixels. If I put it in the bottom one, I also need to add the float2 _MainTex_TexelSize; and it appears to have no effect.

Any help would be much appreciated!

One more update… I’m starting to wonder if this system is working fine and the source, being fed into Post_Mat via this line: Post_Mat.SetTexture(“_SceneTex”, source); … is inverting the SOURCE image.

Final update. Sorry for the spam. I was able to figure it out.

_SceneTex was being inverted in the shader, and yet the selection outline was not. I was able to fix it by removing the 1-i wherever the _SceneTex was being used.

Awesome work Will! Appreciate that!

@Xib added solution to black screen with blured ver. and together you helped me a lot!

Thank you two! ^^
